ITRex Group has recently helped a fitness tech startup create a fitness mirror powered by artificial intelligence and human pose estimation (HPE). We sat down with Kirill Stashevsky, the ITRex CTO, to discuss the specifics of implementing human pose estimation technology that contribute significantly to a project's success but are often overlooked.

— How does one embark on the HPE implementation journey to produce a top-notch solution? What should one watch out for during project planning to make sure further development efforts head in the right direction?

Kirill: Whether you build a winning human pose estimation product depends largely on the decisions you make at the very beginning of the project. One such decision is selecting the optimal implementation strategy: you may develop a solution from scratch or rely on one of the many human pose estimation libraries.

To choose the best-fitting approach, you need to clearly understand, among other things, what exactly you aim to achieve with your future product, which platforms it will run on, and how much time you have until releasing it to the market. Once you've clarified the vision, weigh it against the available strategies.

Consider going the custom route if the task you are solving is narrow and non-trivial and requires the highest possible pose estimation accuracy. Keep in mind, however, that custom development is likely to be time- and effort-intensive.

In turn, if you are developing a product with mass-market appeal or one that addresses a typical use case, library-based development will help you build a quality prototype faster and with less effort. Still, in many cases you will have to adapt the given model to your specific use case by training it further on data that best reflects real-world conditions.

— Suppose I decide to go for library-based development. What factors should I consider when choosing a library?

Kirill: You may go for a proprietary or an open-source library. Proprietary libraries may provide more accurate pose estimation and require less customization. But you have to prepare a backup plan in case the owner, say, discontinues support for the library.

Open-source libraries, in turn, often require more configuration effort. But with an experienced team, they may be the optimal option, balancing recognition quality, moderate development costs, and reasonable time-to-market.
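One way to prepare such a backup plan, offered here as an assumption rather than the approach used on the mirror project, is to keep application code independent of any single library. The minimal sketch below defines a hypothetical `PoseEstimator` interface; each library would get its own small adapter implementing `estimate()`, so replacing a discontinued library means writing one new adapter. The `Keypoints` format (name mapped to x, y, confidence), the `visible_keypoints` helper, and `StubEstimator` are illustrative and not part of any library's API.

```python
from typing import Dict, List, Protocol, Tuple

# Hypothetical keypoint format: name -> (x, y, confidence score).
Keypoints = Dict[str, Tuple[float, float, float]]


class PoseEstimator(Protocol):
    """Interface the rest of the app depends on (hypothetical)."""

    def estimate(self, frame: object) -> Keypoints:
        ...


def visible_keypoints(estimator: PoseEstimator, frame: object,
                      min_score: float = 0.5) -> List[str]:
    """Return sorted names of keypoints detected with at least `min_score` confidence.

    Application logic calls only this interface, so the library behind
    `estimator` can be swapped by writing a new adapter.
    """
    keypoints = estimator.estimate(frame)
    return sorted(name for name, (_x, _y, score) in keypoints.items()
                  if score >= min_score)


class StubEstimator:
    """Placeholder adapter; a real one would wrap a specific library's model."""

    def estimate(self, frame: object) -> Keypoints:
        return {"nose": (0.5, 0.2, 0.9), "left_ankle": (0.4, 0.9, 0.3)}


if __name__ == "__main__":
    print(visible_keypoints(StubEstimator(), frame=None))
```

The design choice here is structural typing via `Protocol`: adapters do not need to inherit from anything, they only need a matching `estimate` method.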

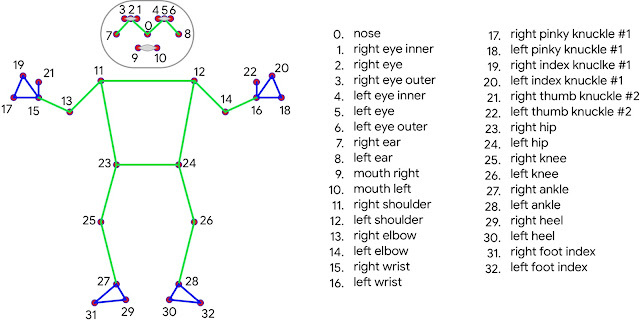

Pay attention to the number of keypoints a library can recognize, too. A solution for dancers or yogis, for instance, may need additional keypoints for the hands and feet, in which case BlazePose may be a more reasonable option. If latency is critical, select a library that runs at 30 FPS or higher, for example, MoveNet.
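These two criteria can be turned into a simple shortlisting step. This is a minimal sketch under assumptions: the `PoseLibrary` record, the `measure_fps` and `shortlist` helpers, and the 30 FPS default are illustrative, not part of any library. Keypoint counts come from each library's documentation (MoveNet predicts 17 keypoints, BlazePose 33), while FPS should be measured on the hardware the product will actually run on, because throughput depends heavily on the device.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass(frozen=True)
class PoseLibrary:
    name: str
    num_keypoints: int    # taken from the library's documentation
    measured_fps: float   # measured on the target device


def measure_fps(infer: Callable[[object], object], frames: Sequence[object],
                warmup: int = 5) -> float:
    """Time `infer` over `frames` and return the average frames per second."""
    if not frames:
        raise ValueError("need at least one frame to benchmark")
    for frame in frames[:warmup]:   # warm-up runs are excluded from timing
        infer(frame)
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed if elapsed > 0 else float("inf")


def shortlist(libs: Sequence[PoseLibrary], min_keypoints: int,
              min_fps: float = 30.0) -> List[PoseLibrary]:
    """Keep libraries that meet both constraints, fastest first."""
    fits = [lib for lib in libs
            if lib.num_keypoints >= min_keypoints and lib.measured_fps >= min_fps]
    return sorted(fits, key=lambda lib: lib.measured_fps, reverse=True)
```

In practice, `infer` would wrap a single call to the candidate model on a representative frame, and its result would populate `measured_fps`.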

— Why aren't the standard datasets that most models are trained on enough for an accurately performing solution? What data should I use to further train and test the model, then?

Kirill: A well-performing human pose estimation model should be trained on data that is authentic and representative of reality. The truth is that even the most extensive datasets often lack diversity and are not enough to yield reliable results in real-life settings. That's what we faced when developing the human pose estimation component for our client's fitness mirror.
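Whether a stock model yields reliable results on your own footage can be checked with a keypoint accuracy metric. A common choice, assumed here and not necessarily the metric used on the project, is PCK (Percentage of Correct Keypoints): a predicted keypoint counts as correct if it lies within `alpha` times a reference length (such as torso or bounding-box size) of its ground-truth position. A minimal pure-Python sketch, with hypothetical function names:

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def pck(pred: Sequence[Point], truth: Sequence[Point],
        ref_length: float, alpha: float = 0.2) -> float:
    """Fraction of predicted keypoints within alpha * ref_length of ground truth."""
    if len(pred) != len(truth) or not truth:
        raise ValueError("pred and truth must be non-empty and the same length")
    threshold = alpha * ref_length
    hits = sum(1 for p, t in zip(pred, truth) if math.dist(p, t) <= threshold)
    return hits / len(truth)


def mean_pck(frames: Sequence[Tuple[Sequence[Point], Sequence[Point], float]],
             alpha: float = 0.2) -> float:
    """Average PCK over (pred, truth, ref_length) triples, one per frame."""
    if not frames:
        raise ValueError("need at least one frame")
    return sum(pck(p, t, ref, alpha) for p, t, ref in frames) / len(frames)
```

Computing `mean_pck` on annotated footage from the target environment, before and after retraining, gives a concrete number for how much the additional data helps.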

To mitigate the issue, we had to retrain the model on additional video footage filmed specifically to reflect the surroundings in which the mirror would be used. We compiled a custom dataset of videos featuring people of different heights, body types, and skin colors exercising in various settings, from poorly lit rooms to spacious fitness studios, all filmed at a particular angle. That helped us significantly increase the model's accuracy.
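Before retraining, it can help to verify that a custom dataset actually covers the variations you care about. The sketch below is an illustrative assumption, not the team's tooling: each clip is described by a hypothetical metadata dictionary (for example, `lighting` or `body_type`), and `coverage_gaps` reports every expected value whose share of clips falls below a chosen threshold, including values missing entirely.

```python
from collections import Counter
from typing import Dict, Iterable, List, Mapping


def coverage_gaps(clips: Iterable[Mapping[str, str]],
                  expected: Mapping[str, Iterable[str]],
                  min_share: float = 0.1) -> Dict[str, List[str]]:
    """Return, per attribute, the expected values that are under-represented.

    `clips` holds one metadata dict per video; `expected` lists the values
    each attribute should cover. Values present in fewer than `min_share`
    of all clips (including absent ones) are reported, sorted by name.
    """
    clip_list = list(clips)
    total = len(clip_list)
    gaps: Dict[str, List[str]] = {}
    for attr, values in expected.items():
        counts = Counter(clip.get(attr) for clip in clip_list)
        low = sorted(v for v in values
                     if total == 0 or counts[v] / total < min_share)
        if low:
            gaps[attr] = low
    return gaps
```

For example, a dataset of ten clips in which only one is dimly lit and none show an athletic body type would flag `"dim"` under `lighting` and `"athletic"` under `body_type` at a 20% threshold.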

— Are there any other easy-to-overlook issues that still influence the accuracy of human pose estimation?

Kirill: While focusing on the internals of deep learning models, development teams may overlook the cameras. So make sure that the features of the camera used to film training data (including its positioning, frame size, frame rate, and shooting angle, as well as whether it shoots statically or dynamically) match those of the cameras real users are likely to employ.
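A lightweight way to act on this advice is to record the camera setup used for training footage and compare it with the setup expected in deployment. In this sketch, the `CameraProfile` fields mirror the features listed above (positioning as mount height, shooting angle as tilt, frame size, frame rate, static or moving), while the field names and the `TOLERANCES` values are hypothetical and would need tuning for a real product.

```python
from dataclasses import dataclass, fields
from typing import Dict, List


@dataclass(frozen=True)
class CameraProfile:
    mount_height_m: float   # positioning (hypothetical unit: meters above floor)
    tilt_deg: float         # shooting angle
    frame_width: int        # frame size
    frame_height: int
    fps: float              # frame rate
    is_static: bool         # static vs. moving camera


# Hypothetical tolerances; fields not listed here must match exactly.
TOLERANCES: Dict[str, float] = {"mount_height_m": 0.2, "tilt_deg": 5.0, "fps": 5.0}


def mismatches(train: CameraProfile, deploy: CameraProfile,
               tolerances: Dict[str, float] = TOLERANCES) -> List[str]:
    """List the camera fields where training and deployment setups differ."""
    diffs = []
    for f in fields(CameraProfile):
        a, b = getattr(train, f.name), getattr(deploy, f.name)
        tol = tolerances.get(f.name)
        if tol is None:
            if a != b:              # exact match required
                diffs.append(f.name)
        elif abs(a - b) > tol:      # numeric field outside tolerance
            diffs.append(f.name)
    return diffs
```

An empty result suggests the training footage was captured under conditions close to those of real users; any listed field points to footage that may need to be re-shot or augmented.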