What is edge AI, and how does it differ from cloud AI?

A standard IoT architecture can be roughly divided into three components: the things, the gateways, and the cloud. The things stand for all kinds of tools, gadgets, and equipment enhanced with sensors generating data. Gateways are centralized devices, say, routers, that connect the things to the cloud. Together, the end devices and the gateways make up the edge layer.

Edge AI, in turn, stands for deploying AI algorithms closer to the edge of the network, that is, either to connected devices (end nodes) or gateways (edge nodes).

In contrast to the cloud-based approach, where AI algorithms are developed and deployed in the cloud, edge-centric AI systems make decisions in a matter of milliseconds and run at a lower cost.

Other benefits of edge AI as compared to cloud AI solutions include:

- Lower processing time: since the data is analyzed locally, there’s no need to send requests to the cloud and wait for responses, which is of utmost importance for time-critical applications, like medical devices or driver assistance systems
- Reduced bandwidth and costs: with no need for high-volume sensor data to be sent over to the cloud, edge AI systems require lower bandwidth (used mainly for transferring metadata), hence, incur lower operational costs
- Increased security: processing data locally helps reduce the risks of sensitive information being compromised in the cloud or while in transit
- Better reliability: edge AI continues running even in case of network disruptions or cloud services being temporarily unavailable
- Optimized energy consumption: processing data locally usually takes up less energy than sending the generated data over to the cloud, which helps extend end devices’ battery lifetime
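
To make the bandwidth point above more tangible, here is a rough back-of-the-envelope sketch. Every number in it (frame size, frame rate, message size, event rate) is an assumption picked for illustration, not a measurement, and real cameras would compress video before sending it, so the actual gap is smaller than with raw frames.

```python
# Illustrative numbers only: all values are assumptions, not measurements.
RAW_FRAME_BYTES = 1920 * 1080 * 3   # one uncompressed 1080p RGB frame
FPS = 30                            # assumed camera frame rate
METADATA_BYTES = 200                # assumed size of one detection message
EVENTS_PER_SECOND = 2               # assumed rate of detections sent upstream


def bytes_per_second_cloud() -> int:
    """Uplink needed if every raw frame is streamed to the cloud."""
    return RAW_FRAME_BYTES * FPS


def bytes_per_second_edge() -> int:
    """Uplink needed if only detection metadata leaves the device."""
    return METADATA_BYTES * EVENTS_PER_SECOND


cloud = bytes_per_second_cloud()
edge = bytes_per_second_edge()
print(f"cloud: {cloud / 1e6:.1f} MB/s, edge: {edge / 1e3:.1f} kB/s")
print(f"reduction factor: {cloud / edge:,.0f}x")
```

Swapping in the parameters of a real deployment (compressed bitrate, actual message size) gives a more realistic estimate of the savings.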

According to MarketsandMarkets, the global edge AI software market is expected to reach $1.8 billion by 2026, growing at a CAGR of 20.8%. Various factors, such as increasing enterprise workloads on the cloud and rapid growth in the number of intelligent applications, are expected to drive the adoption of edge AI solutions.

How edge AI works under the hood

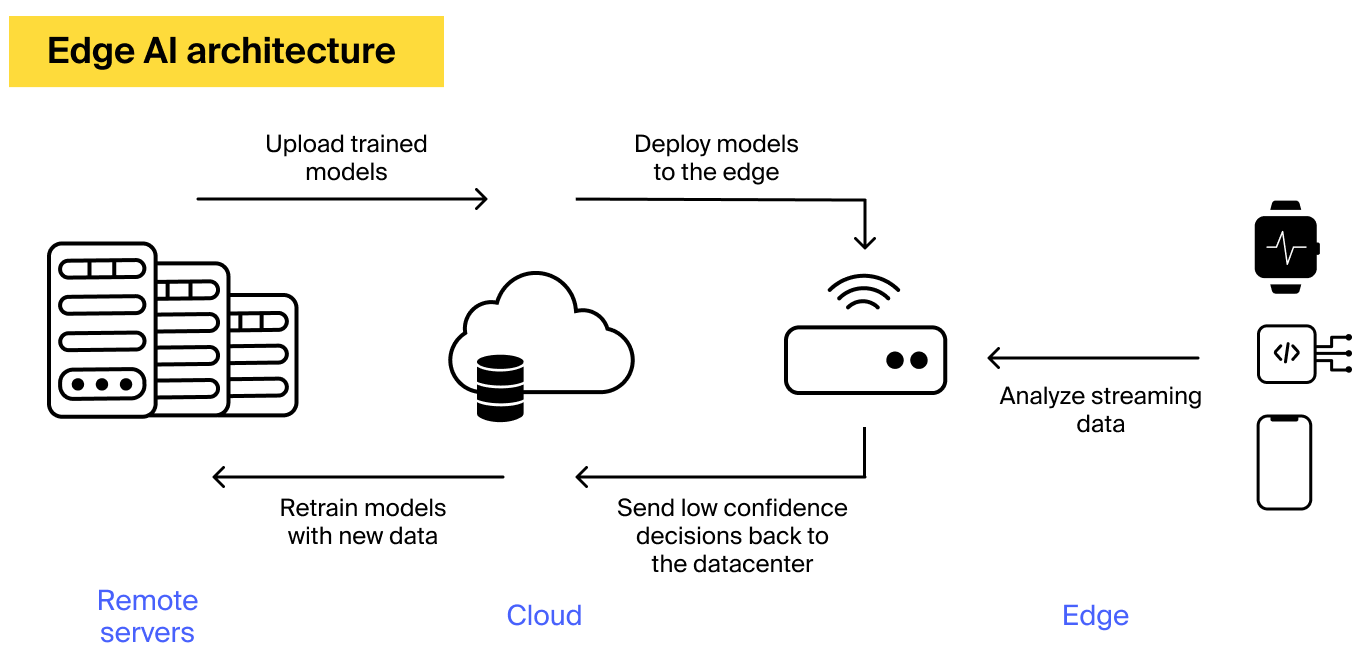

Despite a common misbelief, a standard edge-centered AI solution is usually deployed in a hybrid manner — with edge devices making decisions based on streaming data and a data center (usually, a cloud one) used for revising and retraining the deployed AI models.

So, a basic edge AI architecture typically looks like this:

For edge AI systems to be able to understand human speech, drive vehicles, and carry out other non-trivial tasks, they need human-like intelligence. In these systems, human cognition is replicated with the help of deep learning algorithms, a subset of AI.

The process of training deep learning models often runs in the cloud since achieving higher accuracy calls for huge volumes of data and large processing power. Once trained, deep learning models are deployed to an end or an edge device, where they now run autonomously.

If the model encounters a problem, feedback is sent to the cloud, where the model is retrained. Once retraining is complete, the model at the edge is replaced with a new, more accurate one. This feedback loop keeps the edge AI solution precise and effective.
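
The train-in-the-cloud, run-at-the-edge loop described above can be sketched roughly as follows. This is a toy illustration, not a real deployment: `EdgeNode`, `model_v1`, `cloud_retrain`, and the confidence threshold are all hypothetical names and values, and "a problem" is simplified here to "the model's confidence is low".

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence, Tuple

# A "model" is any callable returning (label, confidence); a hypothetical stand-in.
Model = Callable[[Sequence[float]], Tuple[str, float]]


@dataclass
class EdgeNode:
    model: Model
    confidence_threshold: float = 0.6  # assumed cut-off for "the model is unsure"
    feedback: List[Sequence[float]] = field(default_factory=list)

    def infer(self, sample: Sequence[float]) -> str:
        """Run inference locally; queue low-confidence samples as feedback."""
        label, confidence = self.model(sample)
        if confidence < self.confidence_threshold:
            self.feedback.append(sample)
        return label

    def drain_feedback(self) -> List[Sequence[float]]:
        """Hand the queued samples over (e.g. for upload) and clear the queue."""
        batch, self.feedback = self.feedback, []
        return batch

    def replace_model(self, new_model: Model) -> None:
        """Swap in the retrained model received from the cloud."""
        self.model = new_model


def model_v1(sample: Sequence[float]) -> Tuple[str, float]:
    """Toy model: unsure about readings close to the 0.5 decision boundary."""
    x = sample[0]
    return ("hot" if x > 0.5 else "cold", min(1.0, abs(x - 0.5) * 2))


def cloud_retrain(batch: List[Sequence[float]]) -> Model:
    """Placeholder for a real cloud training job; returns a 'better' toy model."""
    def model_v2(sample: Sequence[float]) -> Tuple[str, float]:
        return ("hot" if sample[0] > 0.5 else "cold", 1.0)
    return model_v2


node = EdgeNode(model_v1)
for reading in ([0.9], [0.55], [0.1]):
    node.infer(reading)                      # [0.55] is queued as feedback
node.replace_model(cloud_retrain(node.drain_feedback()))
```

In a real system the feedback batch would be uploaded over the network and the new model delivered as a versioned artifact; the sketch only shows the shape of the loop.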

A rundown of hardware and software technologies enabling edge AI

A standard edge AI implementation requires hardware and software components.

Depending on the specific edge AI application, there may be several hardware options for performing edge AI processing. The most common ones span CPUs, GPUs, application-specific integrated circuits (ASICs), and field-programmable gate arrays (FPGAs).

ASICs enable high processing capability while being energy-efficient, which makes them a good fit for a wide array of edge AI applications.

GPUs, in turn, can be quite costly, especially when it comes to supporting a large-scale edge solution. Still, they are the go-to option for latency-critical use cases that require data to be processed at lightning speed, such as driverless cars or advanced driver assistance systems.

FPGAs provide even better processing power, energy efficiency, and flexibility. The key advantage of FPGAs is that they are programmable, that is, the hardware “follows” software instructions. That allows for more power savings and reconfigurability, as one can simply change the nature of the data flow in the hardware itself, as opposed to ASICs, CPUs, and GPUs, whose circuitry is fixed once manufactured.

All in all, when choosing the optimum hardware option for an edge AI solution, one should consider a combination of factors, including reconfigurability, power consumption, size, speed of processing, and costs. Here’s how the popular hardware options compare according to the stated criteria:

In turn, edge AI software includes the full stack of technologies enabling the deep learning process and allowing AI algorithms to run on edge devices. The edge AI software infrastructure spans storage, data management, data analysis/AI inference, and networking components.

Edge AI use cases

Companies across sectors are already benefiting from edge AI. Here’s a rundown of the most prominent edge AI use cases from different industries.

Retail: boosting shopping experience

A positive shopping experience is a major concern for retailers, for it is the factor determining customer retention. With the use of AI-powered analytics, retailers can keep consumers satisfied, making sure they turn into repeat customers.

One of the many edge AI applications aiding retail employees in their daily operations and creating a better customer experience is using edge AI to determine when products need to be replenished and replaced.

Another edge AI application is using computer vision solutions in smart checkout systems that could ultimately free customers from the need to scan their goods at the counter.

Retailers are also using intelligent video analytics to dig into customer preferences and improve store layouts accordingly.

Manufacturing: bringing in a smart factory

Manufacturing enterprises, especially those involved in precision manufacturing, need to ensure the accuracy and safety of the production process. By enhancing manufacturing sites with AI, manufacturers can ensure the shop floor is safe and efficient. For that, they adopt AI applications that carry out shop floor inspections, just like the ones used by Procter & Gamble and BMW.

Procter & Gamble uses an edge AI solution that relies on the footage from inspection cameras to inspect chemical mix tanks. To prevent products with flaws from going down the manufacturing pipeline, the edge AI solution deployed right on the cameras pinpoints imperfections and notifies shop floor managers of the spotted quality deviations.

BMW uses a combination of edge computing and artificial intelligence to get a real-time view of the factory floor. The enterprise gets a clear picture of its assembly line via the smart cameras installed throughout the manufacturing facility.

Automotive: enabling autonomous cars

Autonomous cars and advanced driver assistance systems rely on edge AI for improved safety, enhanced efficiency, and a lowered risk of accidents.

Autonomous cars are equipped with a variety of sensors that collect information about road conditions, pedestrian locations, light levels, driving conditions, objects around the vehicle, and other factors. Due to safety concerns, these large volumes of data need to be processed quickly. Edge AI addresses latency-sensitive monitoring tasks, such as object detection, object tracking, and location awareness.

Security: powering facial recognition

One of the areas that is increasingly switching to the edge is facial recognition.

For security apps with facial recognition capabilities, say, a smart home security system, response time is critical. In traditional, cloud-based systems, camera footage is continuously moved around the network, which affects the solution’s processing speed and operating costs.

A more effective approach is processing video data directly on the security cameras. Since no time is needed to transfer the data to the cloud, the application can be more reliable and responsive.

Consumer electronics: enabling new features in mobile devices

Mobile devices generate lots of data. Processing this data in the cloud comes with its share of challenges, such as high latency and bandwidth usage. To overcome these issues, mobile developers have started turning to edge AI to process the generated data at a higher speed and lower cost.

Mobile use cases enabled by edge AI include speech and face recognition, motion and fall detection, pose estimation, and beyond.

The common approach is still hybrid though. The data that requires more storage or high computing capabilities is sent over to the cloud or the fog layer, while the data that can be interpreted locally stays at the edge.
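
A hybrid split like this often boils down to a simple routing rule. The sketch below is one possible way to express it; the `Task` fields and both device limits are hypothetical values chosen for illustration, and a production system would likely also weigh latency budgets and connectivity.

```python
from dataclasses import dataclass

# Assumed device limits; real values depend on the hardware.
DEVICE_MAX_OPS = 5e8                         # compute the device can afford per task
DEVICE_FREE_STORAGE_BYTES = 64 * 1024 ** 2   # 64 MiB of spare on-device storage


@dataclass(frozen=True)
class Task:
    name: str
    compute_ops: float     # estimated operations needed to process the data
    storage_bytes: int     # storage the task needs


def route(task: Task) -> str:
    """Keep the task at the edge if the device can handle it, else send it on."""
    fits_compute = task.compute_ops <= DEVICE_MAX_OPS
    fits_storage = task.storage_bytes <= DEVICE_FREE_STORAGE_BYTES
    return "edge" if fits_compute and fits_storage else "cloud"


for t in (Task("fall detection", 1e6, 10_000),
          Task("usage history analytics", 1e6, 10 ** 12)):
    print(t.name, "->", route(t))
```

Here "cloud" stands for either the cloud or the fog layer mentioned above; adding a third destination would only require another threshold.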

Barriers to edge AI adoption

Limited computing power

Training AI algorithms requires substantial computing power, which is largely unattainable at the edge. So, the majority of edge-centered applications still contain the cloud part, where AI algorithms are trained and updated.

If you are leaning towards building an edge-centered application that relies less on the cloud, you would need to think through ways of optimizing on-device data storage (for example, only keeping frames featuring a face in face recognition applications) and the AI training process.
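
The "only keep frames featuring a face" idea can be sketched as a filter in front of a bounded buffer. The face detector is deliberately left as a parameter (`has_face`), since the article does not name one; the frame format and the `max_stored` limit are likewise assumptions for illustration.

```python
from collections import deque
from typing import Callable, Deque, Iterable, TypeVar

Frame = TypeVar("Frame")


def keep_face_frames(
    frames: Iterable[Frame],
    has_face: Callable[[Frame], bool],   # hypothetical detector supplied by the caller
    max_stored: int = 100,               # assumed on-device storage budget, in frames
) -> Deque[Frame]:
    """Store only frames in which a face was detected, dropping the oldest
    stored frame once the budget is exhausted."""
    stored: Deque[Frame] = deque(maxlen=max_stored)
    for frame in frames:
        if has_face(frame):
            stored.append(frame)
    return stored


# Toy usage: frames are dicts carrying a precomputed face count.
stream = [{"id": i, "faces": i % 3 == 0} for i in range(10)]
kept = keep_face_frames(stream, has_face=lambda f: bool(f["faces"]), max_stored=3)
print([f["id"] for f in kept])
```

Using `deque(maxlen=...)` keeps storage bounded without extra bookkeeping: once the buffer is full, appending a new frame silently evicts the oldest one.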

Security vulnerabilities

Although the decentralized nature of edge applications, and the fact that data does not need to travel across the network, makes edge-centered applications more secure, end nodes are still prone to cyber attacks. So, additional security measures are needed to counter security risks.

Machine learning models powering edge solutions, too, can be accessed and tampered with by criminals. Locking them down and treating them as a key asset can help you prevent edge-related security issues.
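
One possible way to "lock down" a model is to verify its integrity before loading it. The sketch below uses an HMAC-SHA256 tag from Python's standard library; it assumes a secret key provisioned to the device out of band, and it only detects tampering (it does not encrypt the model or protect the key itself). The function names are illustrative.

```python
import hashlib
import hmac


def sign_model(model_bytes: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the serialized model."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def verify_model(model_bytes: bytes, key: bytes, expected_tag: str) -> bool:
    """Return True only if the model matches the tag issued at release time."""
    actual_tag = sign_model(model_bytes, key)
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(actual_tag, expected_tag)


# Toy usage with placeholder bytes standing in for a real model file.
key = b"device-secret-provisioned-out-of-band"   # assumption: stored securely
released = b"\x00model-weights-v1"
tag = sign_model(released, key)

print(verify_model(released, key, tag))                  # untouched model
print(verify_model(released + b"tampered", key, tag))    # modified model
```

A device would run `verify_model` on the model file at startup or after each update and refuse to load a model whose tag does not match.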

Loss of data

The very nature of the edge implies that the data may not make it to the cloud for storage. End devices may be configured to discard the generated data in order to cut down operating costs or improve system performance. While cloud settings come with a fair share of limitations, their key advantage is that all, or almost all, of the generated data is stored and can therefore be used for gleaning insights.

If storing data is necessary for a particular use case, we advise going hybrid and using the cloud to store and analyze usage and other statistical data, just the way we did it when developing a smart fitness mirror for our clients.