What is explainable AI, and why should you care?

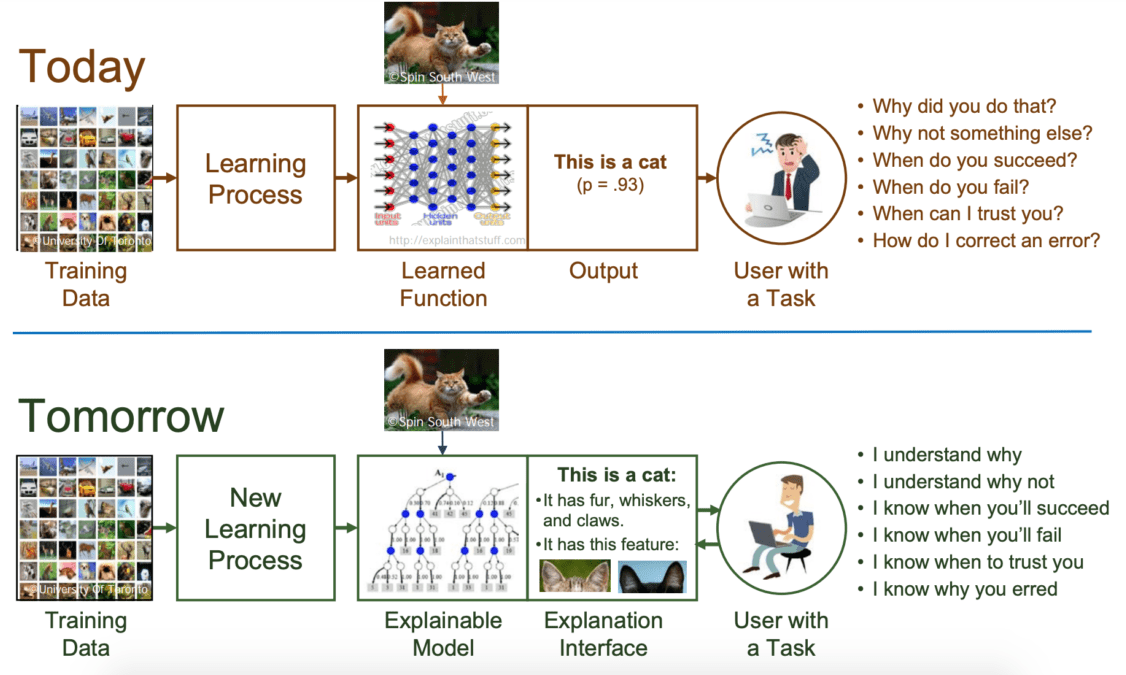

Many people associate AI with mysterious “black box” algorithms that process millions of input data points, produce inexplicable results, and expect users to trust them. These models are created directly from data, and not even the engineers who build them can explain how they arrive at their outputs.

Black box models, such as neural networks, excel at challenging prediction tasks. They produce remarkably accurate results, but no one can fully understand how the algorithms arrive at their predictions. The famous ChatGPT that many of us use is a black box: the model doesn’t explain how it arrived at its response to your prompt. If it presents something as a fact, it’s up to you to verify it.

In contrast, explainable “white box” AI lets users understand the rationale behind its decisions, which is making it increasingly popular in business settings. These models are not as technically impressive as their black box counterparts, but the tradeoff pays off: their transparency offers a higher level of reliability, which is why they are preferred in highly regulated industries.

MYCIN is a white box AI-powered expert system that can identify which bacteria cause a particular infection and recommend antibiotics accordingly. When presented with an infection, the system produces a ranked list of bacteria. Each entry in this list is accompanied by a confidence score and a list of the rules that the tool used to rank that bacterium.
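To make the idea of a self-explaining ranked list concrete, here is a toy sketch in the spirit of MYCIN. MYCIN’s scores were certainty factors rather than true probabilities, and two positive certainty factors supporting the same conclusion were combined as CF1 + CF2 × (1 − CF1). Everything else is an assumption for illustration: the rules, findings, organism names, and certainty values are hypothetical and encode no medical knowledge, and the real system used several hundred rules with more elaborate handling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    conditions: frozenset  # findings that must all be present for the rule to fire
    conclusion: str        # candidate organism the rule supports
    certainty: float       # certainty factor in (0, 1]


# Hypothetical toy rules for illustration only; they encode no medical knowledge.
RULES = [
    Rule("R1", frozenset({"gram_positive", "coccus", "clumps"}), "organism_A", 0.7),
    Rule("R2", frozenset({"gram_positive", "coccus"}), "organism_B", 0.4),
    Rule("R3", frozenset({"gram_negative", "rod"}), "organism_C", 0.6),
    Rule("R4", frozenset({"coccus", "hospital_acquired"}), "organism_A", 0.3),
]


def combine(cf1: float, cf2: float) -> float:
    """Combine two positive certainty factors MYCIN-style."""
    return cf1 + cf2 * (1 - cf1)


def rank(findings: set) -> list:
    """Return (organism, certainty, names of fired rules), best first."""
    scores, fired = {}, {}
    for rule in RULES:
        if rule.conditions <= findings:  # every condition of the rule is satisfied
            scores[rule.conclusion] = combine(scores.get(rule.conclusion, 0.0), rule.certainty)
            fired.setdefault(rule.conclusion, []).append(rule.name)
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    # The list of fired rules is the explanation attached to each entry.
    return [(organism, round(cf, 3), fired[organism]) for organism, cf in ranked]


if __name__ == "__main__":
    for organism, cf, rules in rank({"gram_positive", "coccus", "clumps", "hospital_acquired"}):
        print(f"{organism}: certainty {cf}, supported by rules {rules}")
```

Because every score is built only from rules that fired, the explanation is simply the trace of those rules; nothing has to be reconstructed after the fact.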

What is explainable AI?

Explainable AI (XAI) refers to a set of techniques, design principles, and processes that help developers and organizations add a layer of transparency to AI algorithms so that they can justify their predictions. XAI can describe AI models, their expected impact, and potential biases. With this technology, human experts can understand the resulting predictions and build trust and confidence in the results.

When speaking of explainability, it all boils down to what you want to explain. There are two possibilities:

- Explaining the AI model’s lineage: how the model was trained, which data was used, which types of bias are possible, and how they can be mitigated.

- Explaining the overall model. This is also called “model interpretability.” There are two approaches to this technique:

  - Proxy modeling. A more understandable model, such as a decision tree, is used as an approximation of a more cumbersome AI algorithm. Even though this technique gives a simple overview of what to expect, it remains an approximation, and its results can differ from those of the real model.

  - Design for interpretability. AI models are designed to force simple, easy-to-explain behavior. This technique can result in less powerful models, as it removes some complex tools from the developer’s toolkit.
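Proxy modeling can be illustrated with a minimal sketch. Assume we can query an opaque model but not inspect it; here a hypothetical function `black_box` plays that role. We label random inputs with its answers and fit the simplest possible decision tree, a single split (a “stump”), to those labels. The fidelity score, the share of inputs where the surrogate agrees with the black box, measures how good the approximation is. The feature names, data, and model are illustrative assumptions.

```python
import random


def black_box(x1: float, x2: float) -> int:
    """Stand-in for an opaque model (hypothetical): we can query it but not inspect it."""
    return int(x1 * x1 + 0.3 * x2 > 0.5)


def fit_stump(samples, labels):
    """Fit a depth-1 decision tree ("stump") of the form 'predict 1 if x[f] > t'.

    Tries every feature and every observed value as a threshold and keeps the
    split that agrees most often with the given labels.
    Returns (feature_index, threshold, fidelity).
    """
    best = (0, 0.0, -1.0)
    for f in range(len(samples[0])):
        for t in sorted({s[f] for s in samples}):
            agreement = sum(int(s[f] > t) == y for s, y in zip(samples, labels))
            fidelity = agreement / len(samples)
            if fidelity > best[2]:
                best = (f, t, fidelity)
    return best


# Query the black box on random inputs, then train the surrogate on its answers.
rng = random.Random(0)
samples = [(rng.random(), rng.random()) for _ in range(500)]
labels = [black_box(x1, x2) for x1, x2 in samples]
feature, threshold, fidelity = fit_stump(samples, labels)
print(f"Surrogate rule: predict 1 if x{feature + 1} > {threshold:.2f} "
      f"(matches the black box on {fidelity:.0%} of queried inputs)")
```

The surrogate recovers the dominant feature but not the black box’s exact boundary, so its fidelity stays below 100%. That gap is exactly the “approximation” caveat: a readable rule is only as trustworthy as its measured agreement with the real model.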

Explainable AI principles

The US National Institute of Standards and Technology (NIST) has proposed four explainable AI principles that an AI system should satisfy to be considered explainable.

- Explanation. An AI system should be able to explain its outcome. This principle doesn’t imply that the results are correct; it only means that the system can justify its recommendations. Explanations can take different forms depending on the target audience: end users, developers, model owners, etc.

- Meaningful. A system satisfies this principle if the target audience understands the explanation and can use it to accomplish their task. The explanation must therefore take into account the audience’s needs and level of expertise.

- Explanation accuracy. The explanation must be correct, make sense, and contain sufficient detail; in other words, it must faithfully reflect how the system produced its output. This principle doesn’t imply that the output itself is accurate.

- Knowledge limits. Under this principle, a system operates only within the conditions it was designed for and flags cases that it wasn’t meant to resolve or where its output is unreliable.
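As a rough illustration of how two of these principles can translate into code, here is a minimal sketch of a decision function that always returns an explanation and refers inputs it wasn’t built for to a human. The linear model, its weights, the feature names (`income_k`, `debt_ratio`), and the training ranges are all hypothetical; real systems use more sophisticated out-of-distribution checks than simple range tests.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Decision:
    label: Optional[str]  # None means the system declined to decide
    explanation: List[str]


# Hypothetical model parameters and the input ranges seen during training.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0}
BIAS = -1.0
TRAINING_RANGES = {"income_k": (20.0, 200.0), "debt_ratio": (0.0, 0.8)}


def decide(applicant: Dict[str, float]) -> Decision:
    # Knowledge limits: flag inputs unlike anything the model was trained on.
    problems = [
        f"{name}={value} is outside the training range {TRAINING_RANGES[name]}"
        for name, value in applicant.items()
        if not TRAINING_RANGES[name][0] <= value <= TRAINING_RANGES[name][1]
    ]
    if problems:
        return Decision(None, ["Referred to a human reviewer: " + "; ".join(problems)])

    # Explanation: because the model is linear, the per-feature contributions
    # add up exactly to the score, so the explanation mirrors the computation.
    contributions = {name: WEIGHTS[name] * value for name, value in applicant.items()}
    score = BIAS + sum(contributions.values())
    label = "approve" if score > 0 else "decline"
    explanation = [f"{name} contributed {c:+.2f}" for name, c in contributions.items()]
    explanation.append(f"base {BIAS:+.2f}, total score {score:+.2f}, approve if above 0")
    return Decision(label, explanation)


if __name__ == "__main__":
    print(decide({"income_k": 80, "debt_ratio": 0.3}))
    print(decide({"income_k": 500, "debt_ratio": 0.3}))
```

Choosing a linear model here is what makes the explanation accurate in the NIST sense: the listed contributions are the computation, not an after-the-fact approximation of it.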

Explainable AI example

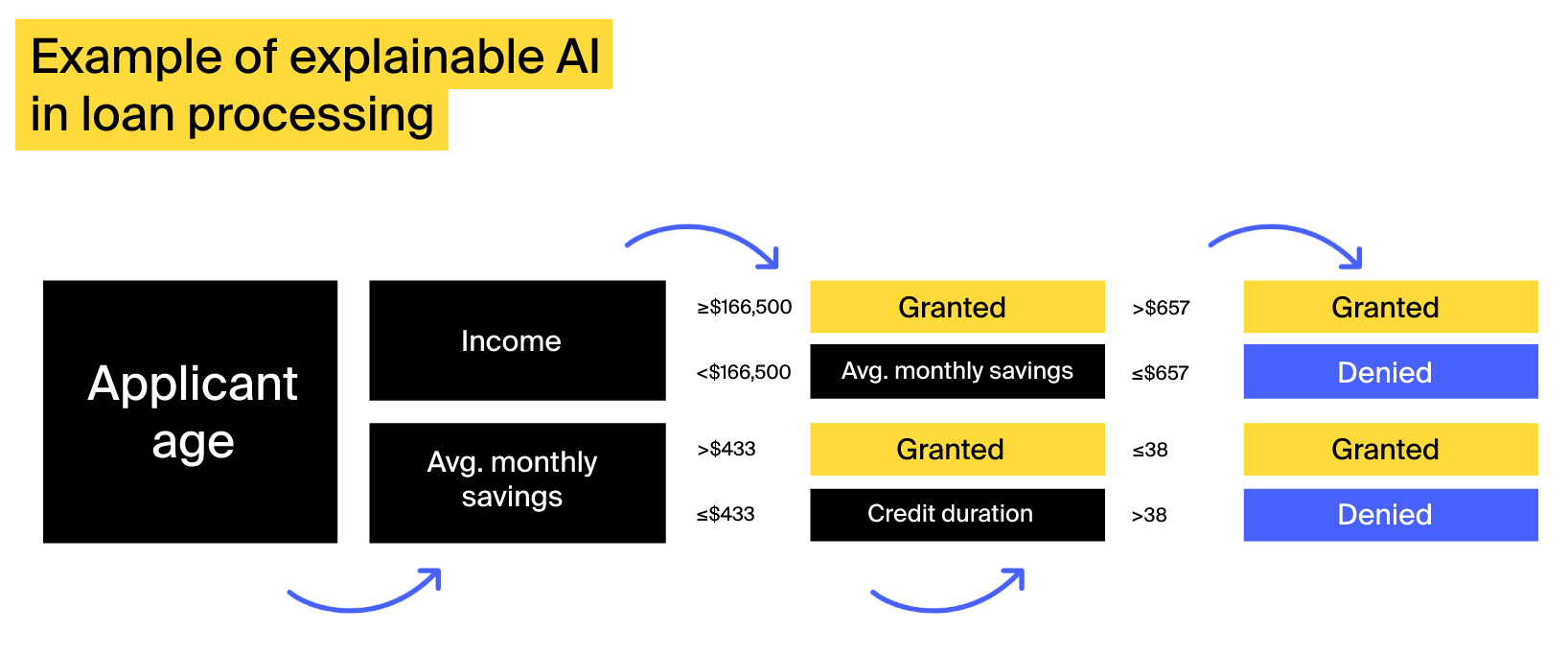

XAI can provide a detailed explanation of why a model made a particular decision, expressed as a set of understandable rules. In the simplified loan application example above, applicants who are denied a loan receive a straightforward justification: everyone who is over 40 years old, saves less than $433 per month, and applies for a loan with a payback period of over 38 years is denied. The same goes for younger applicants who save less than $657 per month.
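Because the loan example boils down to two rules, it can be written as a function that returns its reason together with its decision. This is a minimal sketch with some assumptions: “over 40” is read as `age > 40` and “younger” as 40 or under, and the second rule is read as applying regardless of the payback period, since the text doesn’t say otherwise.

```python
from typing import Optional, Tuple


def loan_decision(age: int, monthly_savings: float,
                  payback_years: float) -> Tuple[bool, Optional[str]]:
    """Apply the two denial rules from the simplified example.

    Returns (approved, reason); the reason is the human-readable rule that
    triggered a denial, or None if the application passes both rules.
    """
    if age > 40:
        if monthly_savings < 433 and payback_years > 38:
            return False, ("Denied: applicants over 40 who save less than $433 per month "
                           "and request a payback period of over 38 years are declined.")
    elif monthly_savings < 657:
        # Assumption: this rule applies regardless of the payback period.
        return False, ("Denied: applicants aged 40 or younger who save less than "
                       "$657 per month are declined.")
    return True, None


if __name__ == "__main__":
    print(loan_decision(age=45, monthly_savings=400, payback_years=40))
    print(loan_decision(age=30, monthly_savings=700, payback_years=40))
```

The denial message is the rule itself, so the explanation a customer receives can never drift from the logic that produced the decision.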

Why is explainable AI important?

In some industries, AI algorithms are accepted only if they can explain their output. This can be due to regulations, human factors, or both. Think about brain tumor classification. No doctor will recommend surgery to a patient solely on the basis that “the algorithm said so.” And what about loan issuance? Clients whose applications were denied would want to understand why. Yes, there are more tolerant use cases where an explanation is not essential. For instance, predictive maintenance applications are not a matter of life or death, but even then, employees would feel more confident knowing why a particular piece of equipment might need preemptive repair.

Senior management often understands the value of AI applications, but they also have their concerns. According to Gaurav Deshpande, VP of Marketing at TigerGraph, there is always a “but” in executives’ reasoning: “…but if you can’t explain how you arrived at the answer, I can’t use it. This is because of the risk of bias in the black box AI system that can lead to lawsuits and significant liability and risk to the company brand as well as the balance sheet.”

The ideal XAI solution is one that is reasonably accurate and can explain its recommendations to practitioners, executives, and end users. Incorporating explainable AI principles into intelligent software:

- Brings relief to system users. They understand the reasoning and can get behind the decisions being made. For example, a loan officer will be more comfortable informing customers that their loan application has been denied if the officer understands how the decision was made.

- Ensures compliance. By verifying the provided explanation, users can see whether the algorithm’s rules are sound and in accordance with the law and ethics.

- Allows for system optimization. When designers and developers see the explanation, they can spot reasoning inconsistencies and fix them.

- Helps reduce bias. When users view the explanation, they can detect instances of biased judgment, override the system’s decision, and correct the algorithm to avoid similar scenarios in the future.

- Empowers employees to act upon the system’s output. For example, an XAI system might predict that a particular corporate customer will not renew their software license. The manager’s first reaction might be to offer a discount. But what if the customer is leaving because of poor customer service? The system’s explanation will shed light on the real problem.
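The churn scenario can be sketched with a simple logistic model whose prediction comes with its main drivers. Everything here is a hypothetical assumption: the feature names (`unresolved_tickets`, `price_increase_pct`, `active_seats_pct`), weights, intercept, and the “average customer” used as a reference point. Each feature’s contribution is its weight times the customer’s deviation from that reference, in log-odds.

```python
import math

# Hypothetical logistic churn model: weights, intercept, and the profile
# of an average customer used as the reference point.
WEIGHTS = {"unresolved_tickets": 0.9, "price_increase_pct": 0.05, "active_seats_pct": -0.03}
INTERCEPT = -1.5
AVERAGE_CUSTOMER = {"unresolved_tickets": 1, "price_increase_pct": 5, "active_seats_pct": 70}


def churn_probability(customer):
    z = INTERCEPT + sum(WEIGHTS[k] * customer[k] for k in WEIGHTS)
    return 1 / (1 + math.exp(-z))


def top_drivers(customer, n=2):
    """Rank features by how much they raise this customer's churn log-odds
    compared with the average customer (largest push first)."""
    contributions = {k: WEIGHTS[k] * (customer[k] - AVERAGE_CUSTOMER[k]) for k in WEIGHTS}
    return sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)[:n]


customer = {"unresolved_tickets": 6, "price_increase_pct": 5, "active_seats_pct": 65}
print(f"churn risk: {churn_probability(customer):.0%}")
for feature, push in top_drivers(customer):
    print(f"  {feature}: {push:+.2f} log-odds vs. average customer")
```

In this toy data, the price contribution is zero while unresolved support tickets dominate, so a discount would target the wrong cause; the ranked drivers point the manager at customer service instead.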

Which industries need XAI the most?

- Healthcare

- Finance

- Automotive

- Manufacturing

1. Explainable AI in healthcare

AI has many applications in healthcare. Various AI-powered medical solutions can save doctors’ time on repetitive tasks, allowing them to focus on patient-facing care. Additionally, algorithms are good at diagnosing various health conditions, as they can be trained to spot subtle details that escape the human eye. However, when doctors cannot explain the outcome, they are hesitant to use this technology and act on its recommendations.

For instance, XAI used in breast cancer detection can generate heatmaps that highlight suspicious areas in a mammogram. Such transparency allows radiologists to see the model’s reasoning and be more confident in their diagnosis.
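The text doesn’t say which heatmap technique such tools use; common options include gradient-based saliency maps and Grad-CAM. The sketch below uses occlusion sensitivity, one of the simplest approaches: blank out one patch of the image at a time and record how much the model’s score drops. The `toy_score` function is a hypothetical stand-in for a trained classifier, and the 4x4 “image” is invented data.

```python
from typing import Callable, List

Image = List[List[float]]


def occlusion_heatmap(image: Image, score: Callable[[Image], float],
                      patch: int = 2, fill: float = 0.0) -> Image:
    """Occlusion sensitivity: blank out one patch at a time and record how much
    the model's score drops. Large drops mark regions the model relied on."""
    h, w = len(image), len(image[0])
    base = score(image)
    heat = [[0.0] * w for _ in range(h)]
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            cells = [(r, c) for r in range(top, min(top + patch, h))
                     for c in range(left, min(left + patch, w))]
            occluded = [row[:] for row in image]
            for r, c in cells:
                occluded[r][c] = fill
            drop = base - score(occluded)
            for r, c in cells:
                heat[r][c] = drop
    return heat


def toy_score(img: Image) -> float:
    # Hypothetical stand-in for a trained classifier: it only responds to the
    # top-right 2x2 region of a 4x4 image.
    return sum(img[r][c] for r in range(2) for c in range(2, 4))


image = [[0.1, 0.1, 0.9, 0.9],
         [0.1, 0.1, 0.9, 0.9],
         [0.1, 0.1, 0.1, 0.1],
         [0.1, 0.1, 0.1, 0.1]]
for row in occlusion_heatmap(image, toy_score):
    print(" ".join(f"{v:.1f}" for v in row))
```

Only the top-right patch lights up, because that is the only region the toy model uses. On a real mammogram the same loop would need a real classifier and many more, smaller patches, which makes occlusion slow but easy to reason about.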

In a recent real-life example, the first of its kind, an international research team used explainable artificial intelligence to predict ICU patients’ length of stay and improve hospital bed management. The scientists used supervised machine learning models to forecast long and short ICU stays with 98% accuracy and to explain how each forecast was made.

2. XAI in finance

Finance is another heavily regulated industry where algorithmic decisions need to be explained. AI-powered solutions in this sector must be auditable; otherwise, they will struggle to enter the market.

AI can help assign credit scores, assess insurance claims, and optimize investment portfolios, among other applications. However, if the algorithms provide biased output, it can result in reputational loss and even lawsuits.

With explainable AI, organizations can avoid such scandals by justifying the output. That’s why reputable financial institutions are striving to incorporate explainable artificial intelligence into their practices and financial software. For instance, JPMorgan Chase established an Explainable AI Center of Excellence that ensures the explainability and fairness of the company’s AI initiatives.

There are also ready-made tools that financial organizations can use. One example is nCino Banking Advisor, an XAI-powered solution that can perform a range of tasks, from asset portfolio management to drafting narratives and filling out documents. This tool can explain its calculations and reasoning, making it safer for banking managers to adopt its recommendations.

3. Explainable AI in the automotive industry

Autonomous vehicles operate on vast amounts of data, requiring AI to analyze and make sense of it all. And just like in the two previous sectors, the system’s decisions need to be transparent for all involved parties, including drivers, technologists, authorities, and insurance companies.

Specifically, it is crucial to understand how vehicles will behave in case of an emergency. Here is how Paul Appleby, former CEO of Kinetica, a data management software company, voiced his concern: “If a self-driving car finds itself in a position where an accident is inevitable, what measures should it take? Prioritize the protection of the driver and put pedestrians in grave danger? Avoid pedestrians while putting the passengers’ safety at risk?”

These are tough questions to answer, and people would disagree on how to handle such situations. But it’s important to set guidelines that the algorithm can follow in such cases. This will help passengers decide whether they are comfortable traveling in a car designed to make certain decisions.

4. Explainable artificial intelligence in manufacturing

AI has many applications in manufacturing, including predictive maintenance, inventory management, logistics optimization, process quality improvement, and more.

Heena Purohit, Senior Product Manager for IBM Watson IoT, explains how their AI-based maintenance product approaches explainable AI. The system offers human employees several options for repairing a piece of equipment, and every option comes with a confidence level expressed as a percentage. This way, users can still draw on their “tribal knowledge” and expertise when making a choice. Each recommendation can also display the knowledge graph output together with the input used in the training phase.

In another example, a research team experimented with XAI to improve the quality of semiconductor manufacturing. They combined nonlinear modeling with SHapley Additive exPlanations (SHAP) to identify a set of production parameters that can enhance the quality of the manufacturing process. The tool could also justify this choice to convince management.
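SHAP is built on Shapley values from game theory: each input feature receives a share of the difference between the model’s output for a given input and its output for a reference (baseline) input, averaged over every order in which features could be “switched on.” In practice, the open-source `shap` Python package provides efficient estimators; the brute-force sketch below computes exact values instead, which is only feasible for a few features. The process model and its parameters (`temperature`, `pressure`, `etch_time`) are hypothetical and not taken from the study.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values of model(x) relative to a baseline input.

    Features outside a coalition are set to their baseline value. The loop
    visits every subset of the other features, so the cost grows as 2**n;
    this brute-force version is only practical for a handful of features.
    """
    n = len(x)

    def value(coalition):
        return model([x[i] if i in coalition else baseline[i] for i in range(n)])

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def quality_model(p):
    # Hypothetical process model: quality score from temperature, pressure,
    # and etch time, with a temperature x time interaction.
    temperature, pressure, etch_time = p
    return 2 * temperature + pressure + 0.5 * temperature * etch_time


x, baseline = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
phi = shapley_values(quality_model, x, baseline)
print(dict(zip(["temperature", "pressure", "etch_time"], phi)))
```

The attributions always add up to `quality_model(x) - quality_model(baseline)`, and the interaction term is split evenly between temperature and etch time. This additivity is what makes SHAP explanations easy to present to management as “how much each parameter moved the result.”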

Challenges on the way to explainable AI

If you are planning to implement or purchase an XAI solution, you can encounter the following challenges, even if you adhere to the four explainable AI principles.

Challenge 1: The need to compromise on the predictive power

Black box algorithms, such as neural networks, have high predictive power but offer no output justification. As a result, users need to blindly trust the system, which can be hard in certain circumstances. White box AI offers the much-needed explainability, but its algorithms need to remain simple, compromising on predictive power.

For example, AI has applications in radiology where algorithms produce remarkable results, classifying brain tumors and spotting breast cancer faster than humans. However, when doctors decide on patients’ treatment, which can be a life-and-death situation, they want to understand why the algorithm came up with this diagnosis. It can be daunting for doctors to rely on something they do not understand.

Challenge 2: Interpretability vs. usability for end users

Explainable AI principles state that the explanation needs to be both accurate and understandable to the target audience. But it’s difficult to find the right balance. Technical explanations may overwhelm end users, while overly simplified ones risk omitting critical details. This trade-off makes it difficult to cater to diverse audiences, including regulators, business leaders, and end customers.

Challenge 3: Security and privacy issues

With XAI, if malicious actors gain insight into the algorithm’s decision-making process, they might engage in adversarial behavior, deliberately changing their own behavior or inputs to influence the output. One study raised the concern that someone with technical skills could reconstruct parts of the training dataset from the explanations, violating privacy regulations.

How to get started with responsible and explainable AI

You can follow the four steps below to ensure responsible implementation and usage of explainable artificial intelligence.

Step 1: Define what needs to be explained and identify the audience

Start by determining the specific aspects of your AI system that require explainability. Is it the rationale behind a single decision, the overall functioning of the model, or both? Tailor your efforts to the audience—technical users, such as data scientists, may require detailed explanations, while non-technical stakeholders, such as business leaders or end users, may prefer simplified insights. Clarify whether you need unique explanations for each output or if a general explanation suffices across use cases. This step ensures your explainability strategy is both effective and audience-appropriate.

Step 2: Decide on the degree of explainability

Not all AI applications demand the same level of interpretability. Use criticality components, such as decision impact, control level, risks, regulations, reputation, and rigor, to assess the desired explainability level. For example, high-stakes applications like medical diagnosis require in-depth explainability, whereas less critical systems, like movie recommendations, may not. This assessment helps prioritize resources and ensures the chosen explainability level aligns with the application’s requirements and risks.
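One way to make this assessment repeatable is a simple scoring rubric. The sketch below rates each criticality component named above on a 1 to 3 scale and maps the total to a target level. The scale, the cut-offs, and the suggested practices per level are illustrative assumptions, not an established standard; calibrate them to your own risk policy.

```python
COMPONENTS = ("decision_impact", "control_level", "risks",
              "regulations", "reputation", "rigor")


def explainability_level(ratings: dict) -> str:
    """Map a 1-3 rating per criticality component to a target explainability level.

    Each rating says how much that component raises the stakes
    (1 = low, 3 = high). The scale and cut-offs are illustrative assumptions.
    """
    missing = set(COMPONENTS) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    if any(not 1 <= ratings[c] <= 3 for c in COMPONENTS):
        raise ValueError("each rating must be between 1 and 3")
    total = sum(ratings[c] for c in COMPONENTS)  # between 6 and 18
    if total >= 14:
        return "high"    # e.g., per-decision explanations, documented and audited
    if total >= 10:
        return "medium"  # e.g., global model explanations plus spot checks
    return "low"         # e.g., basic documentation of purpose and training data


medical_diagnosis = dict.fromkeys(COMPONENTS, 3)
movie_recommendations = dict.fromkeys(COMPONENTS, 1)
print(explainability_level(medical_diagnosis), explainability_level(movie_recommendations))
```

A plain sum treats all components as equally important; if regulation alone should force a high level, add an explicit override rather than tuning the thresholds.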

Step 3: Integrate ethical data practices and conduct regular audits

Even after implementing explainable AI, it is crucial to maintain ethical data usage and regularly audit your algorithms. Strong explainability features can help identify and address biases or inaccuracies in your models. Periodic audits ensure that the system continues to operate transparently, equitably, and without harm to stakeholders. Consider joining initiatives such as the Partnership on AI consortium or creating your own ethical guidelines to facilitate XAI adoption.
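A periodic audit can include automated checks on the decision log. Here is a minimal sketch of one such check: compare approval rates across groups and flag any group whose rate falls below 80% of the best-performing group’s rate, the “four-fifths” rule of thumb from US employment-selection guidelines. The group labels and log are hypothetical, and passing this check alone does not prove a system is fair.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs from the decision log."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_audit(decisions, threshold=0.8):
    """Flag groups whose approval rate is below `threshold` times the highest
    group's rate (the "four-fifths" rule of thumb). Returns {group: ratio}."""
    rates = approval_rates(decisions)
    best = max(rates.values(), default=0.0)
    if best == 0:
        return {}
    return {g: r / best for g, r in rates.items() if r / best < threshold}


# Hypothetical decision log: group A approved 8 of 10 times, group B 5 of 10.
log = [("A", i < 8) for i in range(10)] + [("B", i < 5) for i in range(10)]
print(disparate_impact_audit(log))
```

A flagged group is a prompt to inspect the explanations behind those decisions, which is where the model’s transparency pays off during an audit.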

Step 4: Upskill your team and foster collaboration

Ensure that your team, from developers to business leaders, has the knowledge and skills needed to work effectively with explainable AI systems. Provide training on explainability tools, ethical considerations, and regulatory requirements. Encourage collaboration between data scientists, domain experts, and non-technical stakeholders to create a shared understanding of the AI system. This collaborative approach ensures that explainable AI is effectively integrated into workflows and decision-making processes.

On an end note

Implementing traditional AI is full of challenges, let alone its explainable and responsible version. Despite the obstacles, XAI will bring relief to your employees, who will be more motivated to act upon the system’s recommendations when they understand the rationale behind them. Moreover, XAI will help you comply with your industry’s regulations and ethical considerations.

At ITRex, we specialize in helping organizations navigate the intricate landscape of explainable AI. With our proven expertise in AI strategizing, development, and deployment, we can guide you in building AI solutions that are not only powerful but also transparent, fair, and aligned with your stakeholders’ expectations.