In this article, our generative AI consulting company will explain how the technology can support healthcare organizations.

Generative AI use cases in healthcare

What is generative AI, and how is it different from traditional AI?

Generative AI in healthcare is a transformative technology that synthesizes complex, unstructured data—from electronic health records and medical imagery to physician notes—to create actionable clinical insights. It goes beyond simple analysis by generating nuanced summaries, simulating treatment outcomes, and accelerating the discovery of new diagnostic pathways.

You can learn more about the concept by referring to our recent guide on generative AI vs. classic AI.

Here are the most prominent generative AI use cases in healthcare:

- Enhancing clinical competence
- Augmenting clinical decision-making
- Facilitating drug development
- Automating administrative tasks
- Accelerating research with synthetic medical data

Enhancing clinical competence

Generative AI in healthcare can produce realistic simulations that replicate a wide variety of health conditions, allowing medical students and professionals to practice in a risk-free, controlled environment. AI can generate patient models with different diseases or help simulate a surgery or another medical procedure.

Traditional training involves pre-programmed scenarios, which are restrictive. AI, on the other hand, can quickly generate patient cases and adapt in real time, responding to the decisions the trainees make. This creates a more challenging and authentic learning experience.

Real-life examples

The University of Michigan built a generative AI model that can produce various scenarios for simulating sepsis treatment.

The technology can also help medical students strengthen their communication skills. For instance, UK-based Virti developed a virtual patient solution that allows medical professionals to improve remote clinical communication. They can practice sharing bad news, soothing upset family members, and explaining complex diagnoses.

Augmenting clinical decision-making

The American Medical Association came up with the term “augmented intelligence” to stress the role of AI as supporting, not replacing, clinical staff. Here is how generative AI for healthcare can contribute to diagnostics:

- Generating high-quality medical images. Hospitals can employ generative AI tools to enhance traditional AI’s diagnostic abilities. This technology can convert poor-quality scans into detailed, high-resolution medical images, apply anomaly detection algorithms, and present the results to radiologists.
- Diagnosing diseases. Researchers can train generative AI models on medical images, lab tests, and other patient data to detect and diagnose the early onset of different health conditions. These algorithms can spot skin cancer, lung cancer, hidden fractures, early signs of Alzheimer’s, diabetic retinopathy, and more. Additionally, AI models can uncover biomarkers associated with particular disorders and predict disease progression.
- Answering medical questions. Diagnosticians can put their questions to generative AI tools instead of searching medical books for an answer. AI algorithms can process large amounts of data and generate answers fast, saving doctors’ precious time.

Real-life examples

Mass General Brigham researchers experimented with OpenAI models to detect childbirth-related posttraumatic stress disorder. The LLMs analyzed short, unstructured narratives from new mothers to spot the disorder before it escalates. The AI converted personal stories into data, uncovered hidden insights, and delivered a faster, more affordable, and widely accessible screening method.

In another example, a team of researchers experimented with generative adversarial network (GAN) models to extract and enhance features in low-quality medical scans, transforming them into high-resolution images. This approach was tested on brain MRI scans, dermoscopy, retinal fundoscopy, and cardiac ultrasound images, displaying a superior accuracy rate in anomaly detection after image enhancement.

Facilitating drug development

According to the Congressional Budget Office, developing a new drug costs $1 billion to $2 billion on average, a figure that includes spending on failed drugs. Fortunately, there is evidence that AI has the potential to cut the time needed to design and screen new drugs almost by half, saving the pharma industry around $26 billion in annual expenses in the process. Additionally, this technology can reduce costs associated with clinical trials by $28 billion per year.

How does generative AI help in designing new drugs? Gen AI in healthcare speeds up drug discovery by:

- Designing and generating new molecules with desired properties that researchers can later evaluate in lab settings
- Predicting properties of novel drug candidates and proteins
- Generating virtual compounds with high binding affinity to the target that can be tested in computer simulations to reduce costs
- Forecasting side effects of novel drugs by analyzing their molecular structure

You can find more information on the role of AI in drug discovery and how it facilitates clinical trials on our blog.

Real-life examples

Mass General Brigham experimented with Gen AI to screen patients for clinical trials. The researchers used a tailored GPT-4-based solution to scan patients’ medical records and clinical notes, searching for suitable candidates for heart failure-related clinical trials. The AI was 97.9% accurate in spotting the right patients.

Another interesting example comes from the University of Toronto. A research team built a generative AI system, ProteinSGM, that can generate novel, realistic proteins after studying image-based representations of existing protein structures. This tool can produce proteins at a high rate, and then another AI model, OmegaFold, is deployed to evaluate the resulting proteins’ potential. Researchers reported that most of the novel generated sequences fold into real protein structures.

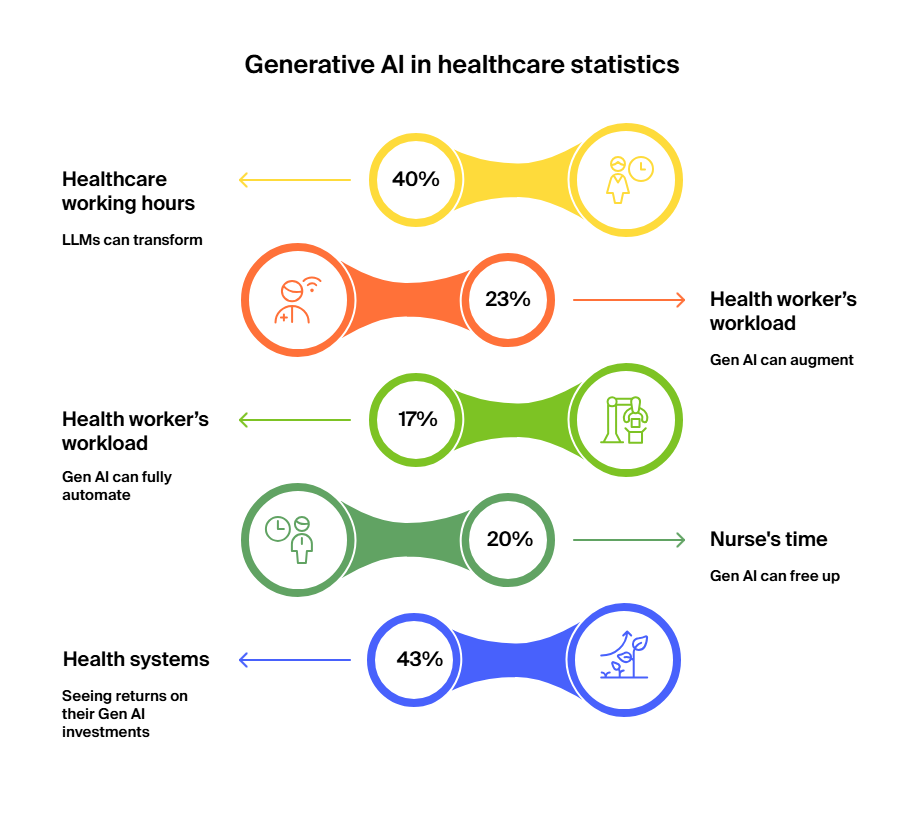

Automating administrative tasks

This is one of the most prominent generative AI use cases in healthcare. Studies show that the burnout rate among physicians in the US has reached a whopping 62%. Doctors experiencing burnout are more likely to be involved in incidents that endanger their patients and are more prone to alcohol abuse and suicidal thoughts.

Fortunately, generative AI in healthcare can take some of the burden off doctors’ shoulders by streamlining administrative tasks. It can also reduce administrative costs, which, according to Health Affairs, account for 15% to 30% of overall healthcare spending. Here is what generative AI can do:

- Extract data from patients’ medical records and populate the corresponding health registries. Microsoft is planning to integrate generative AI into Epic’s EHR. This tool will perform various administrative tasks, such as replying to patient messages.
- Transcribe and summarize patient consultations, fill this information into the corresponding EHR fields, and produce clinical documentation. Microsoft’s Nuance integrated GPT-4 into its clinical transcription software. Doctors can already test the beta version.
- Generate structured health reports by analyzing patient information, such as medical history, lab results, and scans
- Answer doctors’ queries
- Find optimal time slots for appointment scheduling based on patients’ needs and doctors’ availability
- Generate personalized appointment reminders and follow-up emails
- Review medical insurance claims and predict which ones are likely to be rejected
- Compose surveys to gather patient feedback on different procedures and visits, analyze it, and produce actionable insights to improve care delivery

Real-life examples

Can generative AI reduce physician burnout by automating paperwork? Let’s see.

Kaiser Permanente used generative AI in healthcare to record, transcribe, and summarize patient consultations. The initiative saved doctors an equivalent of five years’ worth of working hours. It also increased physician and patient satisfaction.

After Epic integrated Gen AI into its EHRs, the University Medical Center Groningen became one of the first health institutions to adopt the solution. The medical center now relies on it to create patient summaries, draft responses to patient inquiries, and otherwise augment doctor-patient communications.

Accelerating research with synthetic medical data

Medical research relies on accessing vast amounts of data on different health conditions. This data is painfully lacking, especially when it comes to rare diseases. Also, such data is expensive to gather, and its usage and sharing are governed by privacy laws.

Generative AI in medicine can produce synthetic data samples that can augment real-life health datasets and are not subject to privacy regulations, as the healthcare data doesn’t belong to particular individuals. Artificial intelligence can generate EHR data, scans, etc.
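
To make the idea of synthetic data more concrete, here is a minimal Python sketch. It is a deliberately simplified stand-in rather than a generative AI model: it estimates a mean and standard deviation for each column of a tiny table and samples new rows from those estimates. The records, column names, and the `fit` and `sample` helpers are all hypothetical and invented for illustration. Real systems rely on far richer models, such as GANs or LLMs, which also capture the relationships between columns that this sketch ignores.

```python
import random
import statistics

# Hypothetical toy records, invented for illustration (not real patient data).
real_records = [
    {"age": 54, "systolic_bp": 132, "glucose": 101},
    {"age": 61, "systolic_bp": 141, "glucose": 118},
    {"age": 47, "systolic_bp": 125, "glucose": 95},
    {"age": 70, "systolic_bp": 150, "glucose": 130},
]


def fit(records):
    """Estimate a (mean, standard deviation) pair for each numeric column."""
    model = {}
    for column in records[0]:
        values = [record[column] for record in records]
        model[column] = (statistics.mean(values), statistics.stdev(values))
    return model


def sample(model, n, seed=0):
    """Draw n synthetic rows, sampling every column independently."""
    rng = random.Random(seed)  # fixed seed so the output is reproducible
    return [
        {column: round(rng.gauss(mu, sigma), 1) for column, (mu, sigma) in model.items()}
        for _ in range(n)
    ]


model = fit(real_records)
synthetic_records = sample(model, n=3)
```

Each synthetic row has the same columns as the original table, but none of them is copied from an original record. Whether data produced this way is private enough for a given purpose still has to be assessed separately.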

Real-life examples

A team of German researchers built an AI-powered model, GANerAid, to generate synthetic patient data for clinical trials. This model is based on the GAN approach and can produce medical data with the desired properties even if the original training dataset was limited in size.

In a recent study, a research team demonstrated how LLMs can improve Synthea, the leading open-source synthetic health data generator. Instead of relying on manual, time-intensive work to build disease modules, researchers used LLMs to generate new disease profiles, draft and refine modules, and evaluate them for clinical accuracy. In addition to accelerating data generation, Gen AI also broadened the diversity of synthetic patient data and improved its quality.

Ethical considerations and challenges of generative AI in healthcare

We often see how prominent AI experts, including Tesla CEO Elon Musk and OpenAI CEO Sam Altman, warn of the risks associated with the technology. So, which challenges does generative AI bring to healthcare?

- Bias. AI models are only as good as the datasets they were trained on. If the data does not fairly represent the target population, this will leave room for bias against less represented groups. As generative AI tools train on vast amounts of patient records data, they will inherit any bias present there, and it will be a challenge to detect, let alone eradicate it.
- Regulations. Are there any regulations governing the use of generative AI in healthcare? There are several laws that protect patient data, such as GDPR in Europe and HIPAA in the US. They enforce privacy, security, and compliance.

  The more recent, AI-specific regulation, the EU AI Act, came into force on August 1, 2024. It establishes a risk-based regulatory framework for the use, development, and deployment of AI across all sectors, including healthcare. This act classifies AI systems by risk: unacceptable, high, limited, and minimal. Healthcare-related applications, especially those involved in diagnosis, treatment, or emergency triage, fall into the high-risk category. Healthcare facilities operating in the EU have to implement rigorous validation processes to comply.

  There is no equivalent regulation in the US. The Biden Administration’s Executive Order on Safe, Secure, and Trustworthy Development and Use of AI introduces some broad AI governance principles, but not on the same scale.
- Accuracy concerns. AI does make mistakes, and in healthcare, the price of such mistakes is rather high. For instance, LLMs can hallucinate, meaning they can produce plausible-sounding output that is factually incorrect. Healthcare organizations will need to decide when to tolerate errors and when to require the AI model to explain its output. For instance, if generative AI is used to assist in cancer diagnosis, doctors are unlikely to adopt such a tool if it can’t justify its recommendations.
- Accountability. Who is responsible for the final health outcome? Is it the doctor, the AI vendor, the AI developers, or yet another party? Lack of accountability can have a negative impact on motivation and performance.
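
One simple way to look for the kind of bias described above is to compare how often a model catches true cases in each patient group. The Python sketch below computes per-group sensitivity on a handful of made-up evaluation records. The group labels, field names, and the `sensitivity_by_group` helper are hypothetical, and a real fairness audit would use much larger samples and more than one metric.

```python
from collections import defaultdict


def sensitivity_by_group(cases):
    """Return, per group, the share of truly positive cases the model flagged."""
    flagged_positives = defaultdict(int)
    positives = defaultdict(int)
    for case in cases:
        if case["has_condition"]:
            positives[case["group"]] += 1
            if case["model_flagged"]:
                flagged_positives[case["group"]] += 1
    return {group: flagged_positives[group] / positives[group] for group in positives}


# Hypothetical evaluation results, invented for illustration.
cases = [
    {"group": "A", "has_condition": True, "model_flagged": True},
    {"group": "A", "has_condition": True, "model_flagged": True},
    {"group": "A", "has_condition": True, "model_flagged": False},
    {"group": "A", "has_condition": False, "model_flagged": False},
    {"group": "B", "has_condition": True, "model_flagged": True},
    {"group": "B", "has_condition": True, "model_flagged": False},
    {"group": "B", "has_condition": True, "model_flagged": False},
    {"group": "B", "has_condition": True, "model_flagged": False},
]

rates = sensitivity_by_group(cases)
# A large gap between groups is a signal to investigate the training data.
gap = max(rates.values()) - min(rates.values())
```

In this toy data, the model catches two of three true cases in group A but only one of four in group B, which is the kind of gap that would warrant a closer look at how group B is represented in the training set.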

Ready to enhance your healthcare practice with generative AI?

Generative AI algorithms are becoming increasingly powerful. Robert Pearl, a clinical professor at Stanford University School of Medicine, said:

“ChatGPT is doubling in power every six months to a year. In five years, it’s going to be 30 times more powerful than it is today. In 10 years, it will be 1,000 times more powerful. What exists today is like a toy. In next-generation tools, it’s estimated there will be a trillion parameters, which is interestingly the approximate number of connections in the human brain.”

AI can be a powerful ally, but if misused, it can cause significant damage. Healthcare organizations need to approach this technology with caution. If you are considering deploying AI-based solutions for healthcare, here are four tips to get you started:

- Prepare your data. Even if you decide to opt for a pre-trained, ready-made Gen AI model, you might still want to retrain the LLM on your proprietary dataset, which needs to be of high quality and representative of the target population. Keep medical data secure at all times and safeguard patient privacy. It would be useful to disclose which dataset an algorithm was trained on, as it helps to understand where it will perform well and where it might fail.
- Take control of your AI models. Cultivate the concept of responsible AI in your organization. Make sure people know when and how to use the tools and who assumes responsibility for the final outcome. Test the generative AI models on use cases with limited impact before scaling to more sensitive applications. As mentioned earlier, generative AI can make mistakes. Decide where a small failure rate is acceptable and where you can’t afford it. For instance, 98% accuracy can suffice in administrative applications, but it’s unacceptable in diagnostics and patient-facing practices. Devise a framework that will govern the use of generative AI in healthcare at your hospital.

  To reduce hallucination in LLMs, you can deploy retrieval-augmented generation (RAG). This AI architecture dynamically extracts relevant, recent information from different knowledge sources to augment a standard LLM’s response.
- Help your employees accept the technology and use it. AI still needs human guidance, especially in the heavily regulated healthcare sector. Human-in-the-loop remains an essential ingredient for the technology to succeed. The medical and administrative staff will be expected to supervise AI models, so hospitals need to focus on training people for this task. Employees, on the other hand, should be able to reinvent their daily routine, now that AI is a part of it, to use the freed-up time to produce value.
- Find a reliable Gen AI development partner who will assist you every step of the way. At ITRex, we offer an AI/Gen AI readiness assessment to make sure your company can take full advantage of the technology. We also offer an AI proof of concept (PoC) service that allows you to experiment with AI on a small scale before committing your resources to a full-blown project.
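
The retrieval-augmented generation approach mentioned in the tips above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the knowledge snippets are made up, the `tokenize`, `retrieve`, and `build_prompt` helpers are hypothetical, and simple word overlap stands in for the vector search a real system would typically use. The sketch stops at building the prompt and does not call any LLM.

```python
import re

# Hypothetical knowledge snippets, invented for illustration.
KNOWLEDGE_BASE = [
    "Discharge policy: follow-up visits are booked within 14 days of discharge.",
    "Visiting hours: wards are open to visitors from 2 pm to 8 pm.",
    "Billing: claims must include a documented diagnosis code.",
]


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, k=1):
    """Return the k documents sharing the most words with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, documents):
    """Combine the retrieved context and the question into one LLM prompt."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


prompt = build_prompt(
    "When are follow-up visits booked after discharge?", KNOWLEDGE_BASE
)
```

Because the prompt carries the retrieved snippet and instructs the model to stay within it, the model has less room to invent an answer, and a reviewer can check the response against the quoted source.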

FAQs

- How does generative AI in healthcare assist in diagnosing diseases like cancer or Alzheimer’s?

  Generative AI in healthcare can analyze large volumes of medical data, such as imaging scans, lab results, and patient histories, and highlight patterns that may indicate early signs of diseases like cancer or Alzheimer’s. It doesn’t replace doctors but acts as a powerful support tool, helping clinicians detect conditions earlier, make more accurate diagnoses, and personalize care plans.

- Is it safe to let AI respond to patient messages or generate treatment plans?

  Gen AI in healthcare can help draft responses and suggest treatment options, but it should never operate without human oversight. Clinicians must review and approve all AI-generated outputs to ensure accuracy, safety, and alignment with medical guidelines. Used this way, AI can save time without putting patients at risk.

- What are the risks of bias in generative AI models trained on patient data?

  If the data used to train AI systems is incomplete or not diverse enough, the models may produce biased results. This could mean underdiagnosing or misdiagnosing certain patient groups. To reduce this risk, healthcare organizations must use high-quality, representative data and regularly test AI systems for fairness.

- How can hospitals ensure responsible use of AI tools?

  Hospitals should establish clear governance frameworks that include regular audits, data quality checks, and compliance with regulations such as GDPR, HIPAA, and the EU AI Act. Medical facilities should also dedicate time to training staff on how to use AI responsibly and be transparent with patients about how AI contributes to their care.

- Is human oversight still necessary when using generative AI?

  Yes. AI can support clinical decisions, but it can’t replace human judgment. Oversight ensures that recommendations are interpreted correctly, ethical considerations are applied, and patient safety is protected. In healthcare, AI works best as a partner, not a substitute, for skilled professionals.